High-quality data may be the key to high-quality AI. With studies finding that dataset curation, rather than size, is what really affects an AI model’s performance, it’s unsurprising that there’s a growing emphasis on dataset management practices. According to some surveys, AI researchers today spend much of their time on data prep and organization tasks.

Brothers Vahan Petrosyan and Tigran Petrosyan felt the pain of having to manage lots of data while training algorithms in college. Vahan went so far as to create a data management tool during his PhD research on image segmentation.

A few years later, Vahan realized that developers — and even corporations — would happily pay for similar tooling. So the brothers founded a company, SuperAnnotate, to build it.

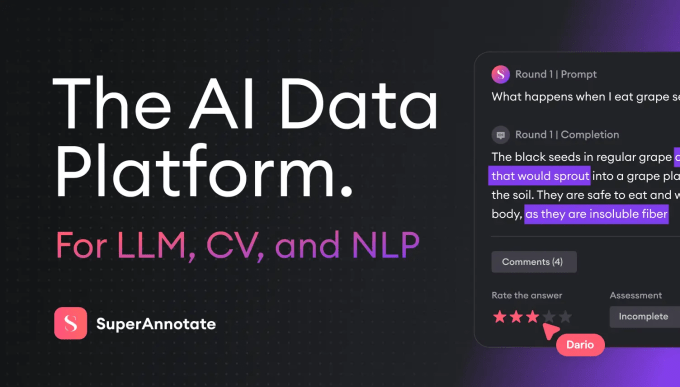

“During the explosion of innovation in 2023 surrounding models and multimodal AI, the need for high-quality datasets became more stringent, with each organization having multiple use cases requiring specialized data,” Vahan said in a statement. “We saw an opportunity to build an easy-to-use, low-code platform, like a Swiss Army Knife for modern AI training data.”

SuperAnnotate, whose clients include Databricks and Canva, helps users create and keep track of large AI training datasets. The startup initially focused on labeling software, but now provides tools for fine-tuning, iterating, and evaluating datasets.

With SuperAnnotate’s platform, users can connect data from local sources and the cloud to create data projects that they can collaborate on with teammates. From a dashboard, users can compare models’ performance according to the data used to train them, and then deploy those models to various environments once they’re ready.

SuperAnnotate also gives companies access to a marketplace of crowdsourced workers for data annotation tasks. Annotations, usually pieces of text labeling the meaning or parts of the data that models train on, serve as guideposts for models, “teaching” them to distinguish things, places, and ideas.

To be frank, there are several Reddit threads about SuperAnnotate’s treatment of the data annotators it uses, and they aren’t flattering. Annotators complain about communication issues, unclear expectations, and low pay.

For its part, SuperAnnotate claims it pays fair market rates and that its demands on annotators aren’t outside the norm for the industry. We’ve asked the company to provide more detailed information about its practices and will update this piece if we hear back.

Edit: A few hours after this story was published, SuperAnnotate sent this statement via email: “About eight months ago, during a period of rapid scaling, we encountered challenges in maintaining clear communication with some annotators working on our projects. As is sometimes the case during rapid growth, a few process gaps emerged. We took this feedback seriously and have since made improvements to both how annotators interact with the platform and communication processes.”

There are several competitors in the AI data management space, including startups like Scale AI, Weka, and Dataloop. San Francisco-based SuperAnnotate has managed to hold its own, however, recently raising $36 million in a Series B round led by Socium Ventures, with participation from Nvidia, Databricks Ventures, and Play Time Ventures.

The fresh capital, which brings SuperAnnotate’s total raised to just over $53 million, will go toward expanding its current team of around 100 people, product R&D, and growing its customer base of roughly 100 companies.

“We aim to build a platform capable of fully adapting to enterprises’ evolving needs and offering extensive customization in data fine-tuning,” Vahan said.

TechCrunch has an AI-focused newsletter! Sign up here to get it in your inbox every Wednesday.